TDSM 10.11

Caitaozhan (knn classification all positive.)

Anjul.tyagi

Latest revision as of 05:13, 12 December 2017

(a)

Impossible.

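Imagine the Voronoi diagram of the training set, where every cell contains exactly one training point and consists of all locations closer to that point than to any other training point. Each cell occupies some area of the space, so the cells of the negative points do as well (each one contains at least the negative point itself). Under nearest-neighbor classification, any new example that falls into a negative point's cell is classified as negative, so not every new example can be labeled positive.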
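The Voronoi argument can be spot-checked numerically. The sketch below assumes a hypothetical training set (three positives and one negative in the unit square, coordinates chosen only for illustration) and a hand-written 1-NN rule, `nearest_label`, which is not a library API. A query placed on the negative point is labeled negative, and a Monte Carlo estimate (an addition of this sketch, not part of the original answer) shows that the negative cell covers a nonzero share of the square.

```python
import math
import random

def nearest_label(query, training):
    """1-NN: return the label of the training point closest to `query`."""
    _, label = min(training, key=lambda item: math.dist(query, item[0]))
    return label

# Hypothetical training set: three positives and one negative in the unit square.
training = [
    ((0.2, 0.2), "+"),
    ((0.8, 0.2), "+"),
    ((0.2, 0.8), "+"),
    ((0.7, 0.7), "-"),
]

# A query placed exactly on the negative training point lies in that point's
# Voronoi cell, so 1-NN labels it negative.
print(nearest_label((0.7, 0.7), training))  # -

# Monte Carlo estimate of the share of the unit square covered by the negative cell.
random.seed(0)
samples = [(random.random(), random.random()) for _ in range(10_000)]
negative_share = sum(nearest_label(q, training) == "-" for q in samples) / len(samples)
print(negative_share > 0)  # True
```

Moving the negative point or adding more positives changes the size of its cell, but in this sketch the cell always contains the negative point itself.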

(Edit: I think the answer is yes: if all the new data points lie near the positive points, all of them will be labeled positive, regardless of how many data points the training set contains.)

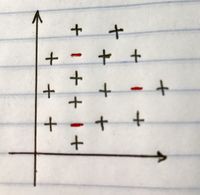

(b)

Possible.

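Consider a training set with only three negative points, each surrounded by a tight cluster of positive points and placed far from the other negatives. Because each negative is ringed by positives that are closer to any nearby query than the other negatives are, at most one of a new example's three nearest neighbors can be negative. With k=3 the majority vote is therefore always positive, so no new example can be classified as negative.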
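A minimal sketch of this construction, assuming a layout not given in the original: three negatives at hypothetical coordinates (0, 0), (10, 0) and (0, 10), each ringed by eight positives at radius 1. The helpers `knn_label` and `build_training` are illustrative, not a library API. Sampling random queries only spot-checks the claim; it does not prove it.

```python
import math
import random
from collections import Counter

def knn_label(query, training, k=3):
    """Majority label among the k training points closest to `query`."""
    neighbors = sorted(training, key=lambda item: math.dist(query, item[0]))[:k]
    return Counter(label for _, label in neighbors).most_common(1)[0][0]

def build_training(centers, ring_size=8, radius=1.0):
    """Each center becomes a negative point ringed by `ring_size` positives."""
    training = []
    for cx, cy in centers:
        training.append(((cx, cy), "-"))
        for i in range(ring_size):
            angle = 2 * math.pi * i / ring_size
            training.append(((cx + radius * math.cos(angle), cy + radius * math.sin(angle)), "+"))
    return training

# Hypothetical layout: three negatives spaced far apart relative to the ring radius.
centers = [(0.0, 0.0), (10.0, 0.0), (0.0, 10.0)]
training = build_training(centers)

# Query each negative point directly, then many random points around the clusters.
random.seed(1)
queries = list(centers) + [(random.uniform(-5, 15), random.uniform(-5, 15)) for _ in range(5_000)]
labels = {knn_label(q, training, k=3) for q in queries}
print(labels)  # {'+'}

# Contrast with part (a): with k=1 the negative point itself is still labeled negative.
print(knn_label(centers[0], training, k=1))  # -
```

Querying exactly at a negative point returns one negative (distance 0) and two ring positives (distance 1), so the 3-NN vote is still positive, while the 1-NN vote is not.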